Python Package Documentation - External API

This chapter describes eCognition's external Python API. The external API is used from a regular Python interpreter after installing the ecognitionapi package from the appropriate wheel package.

Installation files for external Python API - eCognition wheel packages

The ecognitionapi package supports Python versions 3.8 to 3.13.

Commands for installation of wheel packages:

python -m pip install ecognitionapi --extra-index-url https://ecognition-wheels.trimblegeospatial.com/

pip install ecognitionapi --extra-index-url https://ecognition-wheels.trimblegeospatial.com/
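Since the wheels are stated to cover Python 3.8 to 3.13, it can help to check the interpreter before running pip. The following is a minimal sketch of such a check; the helper name is_supported_python is hypothetical and not part of ecognitionapi.

```python
import sys

# Supported range as stated in this chapter (inclusive on both ends).
MIN_VERSION = (3, 8)
MAX_VERSION = (3, 13)


def is_supported_python(version_info=None):
    """Return True if the (major, minor) version lies in the documented range."""
    if version_info is None:
        version_info = sys.version_info
    major_minor = tuple(version_info[:2])
    return MIN_VERSION <= major_minor <= MAX_VERSION


print("Python", sys.version.split()[0], "supported:", is_supported_python())
```

Passing an explicit tuple such as (3, 7) makes the check easy to try without switching interpreters.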

Exemplary Python scripts - see below.

This chapter covers only the external API - an embedded eCognition API is provided based on the algorithm 'python script' using Python files in inline scripts. See Embedded Python API Reference.htm.

Class EcognitionApi

Class for eCognition engine API. Used to create and analyze eCognition projects via rule sets.

Special methods EcognitionApi

__init__(log_file_path, license_server)

Initialize and start the eCognition engine.

__enter__()

A method used for EcognitionApi context management. Enables the use of EcognitionApi in a with statement.

__exit__(*args)

Used for EcognitionApi context management. This method will call EcognitionApi.shutdown() method, when exiting a with statement.
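The context management methods above suggest a usage pattern like the following sketch. The helper engine_version and its make_api parameter are hypothetical names for illustration. The code uses "with api:" instead of "with ... as api" because the return value of __enter__ is not described here.

```python
def engine_version(make_api):
    """Start an engine, read its version, and let the with block shut it down.

    make_api is a zero-argument callable that returns an EcognitionApi
    instance, for example a lambda wrapping the EcognitionApi constructor.
    """
    api = make_api()
    # Leaving the with block triggers __exit__, which calls shutdown().
    with api:
        return api.version()
```

With this pattern the engine is shut down even if a call inside the block raises an exception.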

Methods EcognitionApi

shutdown()

Shutdown the eCognition engine.

version()

eCognition engine version.

add_image(img_path, layer_name, map_name)

Add an image to the eCognition project.

add_thematic(thm_path, attr_path, layer_name, map_name)

Add a thematic layer to the eCognition project.

add_point_cloud(pc_path, layer_name, map_name)

Add a point cloud to the eCognition project.

get_point_cloud_classes(layer_name, map_name)

Get a list of point cloud classes.

add_camera_image(camera_images, camera_info, layer_name, map_name)

Add camera images with camera information to the eCognition project.

create_project()

Create an eCognition project. Must be called before EcognitionApi.analyze can be called.

create_project_set_size(llx, lly, res, size_x, size_y)

Create a project (main map) with specified position, resolution and size.

save_project(project_path)

Save an eCognition project to a specified path.

close_project()

Close an eCognition project and release used memory.

load_ruleset(ruleset_path)

Load rule set to the eCognition project.

analyze(process_id)

Run an analysis using the loaded rule set.

get_variable_value_float(variable_name)

Get a float variable value from the eCognition project.

get_variable_value_str(variable_name)

Get a string variable value from the eCognition project.

set_variable_value(variable_name, variable_value)

Set a variable value in the eCognition project.

get_array_float(array_name)

Get a float array from the eCognition project.

set_array(array_name, array_values)

Set array values in the eCognition project.

get_vector_layer(layer_name, map_name)

Get a vector layer with a given name.

create_vector_layer(layer_name, vector_type, map_name)

Create a new vector layer with a given name.

set_deep_learning_cpu_only(cpu_only)

Set whether Tensorflow should use only CPU.

set_cancel_analyze()

Set the flag to cancel the analysis.

reset_ruleset()

Reset the loaded ruleset.
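The methods above are typically combined in a fixed order: load a rule set, add data, create the project, analyze, then save and close. The following sketch wraps that order in one function. The name run_ruleset is hypothetical, and the api argument is assumed to be an already started EcognitionApi object. Whether analyze raises a Python exception on failure is not stated here; the try/finally is only a precaution.

```python
def run_ruleset(api, ruleset_path, image_path, process_path="", project_path=None):
    """Run a rule set on one image using an already started EcognitionApi object."""
    api.load_ruleset(ruleset_path)
    api.add_image(img_path=image_path)
    # create_project() must be called before analyze().
    api.create_project()
    try:
        # An empty process path runs all processes of the rule set.
        api.analyze(process_path)
        if project_path is not None:
            api.save_project(project_path)
    finally:
        # Release project memory even if a call above raised an exception.
        api.close_project()
```

The function leaves shutdown() to the caller, so the same engine can process several images in sequence.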

Class VectorLayer

Class for eCognition vector layer.

Methods - Properties VectorLayer

name

Returns vector layer name of type string.

vector_type

Returns vector layer type.

is_3d_type

Returns True if the vector layer is 3D type, False otherwise.

Methods VectorLayer

get_attribute_definitions()

Get attribute names and types of the vector layer.

get_attribute_count()

Get the number of attributes of the vector layer.

get_vector_count()

Get the number of vectors in the vector layer.

get_vector(vector_idx)

Get a vector from the vector layer.

add_attribute_definition(attribute_name, attribute_type)

Add an attribute definition to the vector layer. This method must be called before adding vectors to the vector layer.

add_vector(points: Sequence[tuple[float, float]], attr_val: Sequence[Union[float, str]], holes: Optional[Sequence[Sequence[tuple[float, float]]]])

Add a 2D vector with attribute values to the vector layer.

add_vector(points: Sequence[tuple[float, float, float]], attr_val: Sequence[Union[float, str]], holes: Optional[Sequence[Sequence[tuple[float, float, float]]]])

Add a 3D vector with attribute values to the vector layer.
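Reading a layer back can be sketched with get_vector_count() and get_vector(). The helper collect_attributes is a hypothetical name, and the code assumes that vector indices are zero-based, which is not stated in this reference.

```python
def collect_attributes(layer):
    """Return one attribute dictionary per vector in a VectorLayer."""
    rows = []
    # Assumption: indices run from 0 to get_vector_count() - 1.
    for vector_idx in range(layer.get_vector_count()):
        vector = layer.get_vector(vector_idx)
        # get_attributes() maps attribute names to values.
        rows.append(dict(vector.get_attributes()))
    return rows
```

The layer object would come from EcognitionApi.get_vector_layer() or create_vector_layer().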

Class VectorType

Type of the vector layer geometry.

Class AttributeType

Type of the vector layer attribute.

Class VectorPoint

An eCognition vector point class.

Methods VectorPoint

is_3d_type

Returns True if the vector layer is 3D type, False otherwise.

get_attributes()

Get attribute name to value dictionary.

get_point_count()

Get the number of points in vector.

points()

Generator that yields points of the vector.

get_point()

Get a single point of the vector.

Class VectorLine

An eCognition vector line class.

Methods VectorLine

is_3d_type

Returns True if the vector layer is 3D type, False otherwise.

get_attributes()

Get attribute name to value dictionary.

get_point_count()

Get the number of points in vector.

points()

Generator that yields points of the vector.

Class VectorPolygon

An eCognition vector polygon class.

Methods VectorPolygon

is_3d_type

Returns True if the vector layer is 3D type, False otherwise.

get_attributes()

Get attribute name to value dictionary.

get_point_count()

Get the number of points in vector.

points()

Generator that yields points of the vector.

get_hole_count()

Get the number of inner holes in the vector.

get_hole_point_count(hole_idx)

Get the number of points in the inner hole.

get_hole_points(hole_idx)

Generator that yields points of the inner vector hole.
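The polygon accessors above can be combined to read the outer ring and all inner holes. This is a minimal sketch: polygon_rings is a hypothetical helper, and hole indices are assumed to be zero-based.

```python
def polygon_rings(polygon):
    """Return (outer_ring, holes) as plain lists for a VectorPolygon."""
    # points() is a generator, so materialize it into a list.
    outer = list(polygon.points())
    holes = []
    # Assumption: hole indices run from 0 to get_hole_count() - 1.
    for hole_idx in range(polygon.get_hole_count()):
        holes.append(list(polygon.get_hole_points(hole_idx)))
    return outer, holes
```

The returned lists hold whatever point objects the generators yield; their exact type is not specified in this reference.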

Class CameraFrameInformation

Camera information for each individual frame.

__init__(x, y, z, roll, pitch, heading)

Class CameraRotationOrder

The order of camera rotations.

Class CameraCoordinateSystem

The coordinate system the camera uses.

Class CameraImage

Camera image with camera frame information.

__init__(image_path, info)

Class CameraInformation

Camera information that all frames share.

__init__(pixel_size_x, pixel_size_y, width, height, focal_length_x, focal_length_y, principal_point_x, principal_point_y, rotation_order, coordinate_system)

Python External API Examples

The following examples introduce some basic approaches using eCognition's external API. The examples are installed together with eCognition Developer in <eCognition Developer installation directory>\bin\examples\python-external-api.

Overview

Precondition to use the examples:

make sure that the downloaded examples folder python_external_api is in a non-read-only location

create a new python virtual environment

install requirements.txt: pip install -r requirements.txt
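The example scripts in this chapter write to the relative folders logs and results. Whether the engine creates missing folders is not stated here, so a cautious sketch is to create them before running an example. The helper name ensure_output_dirs is hypothetical.

```python
import os


def ensure_output_dirs(base_dir=".", names=("logs", "results")):
    """Create the folders used by the example scripts and return their absolute paths."""
    paths = []
    for name in names:
        path = os.path.abspath(os.path.join(base_dir, name))
        # exist_ok=True makes the call safe to repeat.
        os.makedirs(path, exist_ok=True)
        paths.append(path)
    return paths
```

Calling ensure_output_dirs() from the examples folder would prepare both folders in the current working directory.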

Example - simple_processing_example.py

In this example an image is loaded and analyzed based on a simple rule set. The example shows the following main steps:

Load rule set multi-resolution.dcp

Load the image data Landast.tif

Create a project

Apply the rule set

to execute a branch of the rule set specify separating parent nodes with double slash (//)

to execute all processes from the ruleset, specify: ""

Save and close the project

import ecognitionapi as ecog
import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"
os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def simple_project_example():
    print("-----------------------------------------------")
    print("Example: simple image/rule set execution pipeline. Load image and run rule set")

    # create eCognition API
    ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

    # load rule set
    ecogApi.load_ruleset(os.path.abspath("rulesets/multi-resolution.dcp"))

    # add image
    ecogApi.add_image(img_path = os.path.abspath("data/Landast.tif"))

    # create a project
    ecogApi.create_project()

    # execute branch of the ruleset specified explicitly: separating parent nodes with double slash (//)
    # to execute all processes from the ruleset, specify: ""
    ecogApi.analyze("do//segmentation")

    # save the project
    ecogApi.save_project(os.path.abspath("results/project.dpr"))

    # close the project
    ecogApi.close_project()

    # shutdown API
    ecogApi.shutdown()

if __name__ == "__main__":
    simple_project_example()

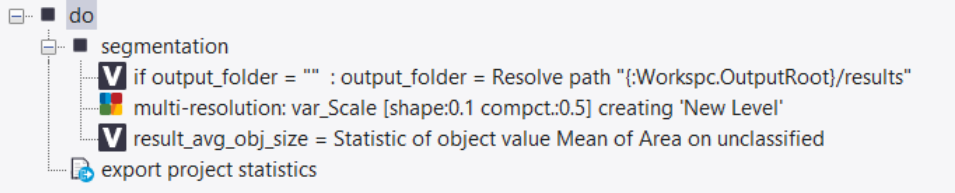

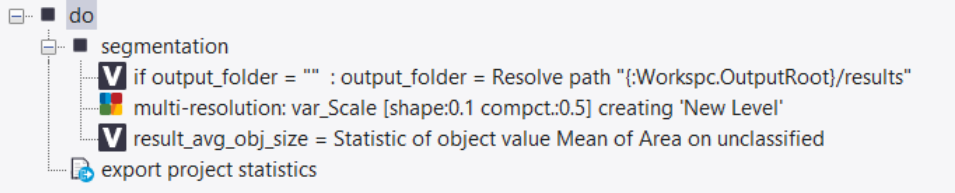
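The string passed to analyze() names a branch of the rule set by joining parent nodes with a double slash, and an empty string runs all processes. A small sketch of building that string follows; process_path is a hypothetical helper, not part of ecognitionapi.

```python
def process_path(*nodes):
    """Build the string passed to analyze() from rule-set node names.

    With no arguments it returns "", which runs all processes.
    """
    for node in nodes:
        # A name containing the separator would make the path ambiguous.
        if not node or "//" in node:
            raise ValueError(f"invalid process name: {node!r}")
    return "//".join(nodes)
```

For the example above, process_path("do", "segmentation") gives "do//segmentation".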

Example - variable_example.py

This example demonstrates how to pass parameters to rule set using Scene variables. It also demonstrates how a result value can be retrieved after the analysis using Scene variables. The example shows the following main steps:

Load rule set multi-resolution.dcp

Load the image data Landast.tif

Create a project

Define parameters in the rule set - set Scene variable (specified in the ruleset) that will control the output path for export statistics.csv

Apply the rule set

Get the result of the analysis

Close the project
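The variable-related steps above can be sketched as one helper that sets several Scene variables, runs the rule set, and reads a float result. The name run_with_variables is hypothetical; the api argument is assumed to be an EcognitionApi object with a loaded rule set and a created project.

```python
def run_with_variables(api, inputs, result_name, process_path=""):
    """Set input variables, run the rule set, and return one float result."""
    # inputs maps variable names (as defined in the rule set) to values.
    for name, value in inputs.items():
        api.set_variable_value(name, value)
    api.analyze(process_path)
    return api.get_variable_value_float(result_name)
```

With the variable names used in this example, a call could look like run_with_variables(ecogApi, {"output_folder": export_dir, "var_Scale": 100}, "result_avg_obj_size", "do").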

import ecognitionapi as ecog

import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"

os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def variable_example():

'''

This example demonstrates how to pass parameters to rule set using Scene variables

It also demonstrates how result value can be retrieved after analysis using Scene variables

'''

print("-----------------------------------------------")

print(f"Example: how to set input variable parameter and get output variable from the ruleset.")

# create eCognition API

ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

# load rule set

ecogApi.load_ruleset(os.path.abspath("rulesets/multi-resolution.dcp"))

# add image

ecogApi.add_image(img_path = os.path.abspath("data/Landast.tif"))

# create a project

ecogApi.create_project()

# set parameters to the rule set

# here we set Scene variable (specified in the ruleset) that will control output path for export statistics .csv

export_dir = os.path.abspath("results")

print(f"setting input parameters: output_folder and multi-resolution segmentation scale")

ecogApi.set_variable_value("output_folder", export_dir)

ecogApi.set_variable_value("var_Scale", 100)

# run rule set

ecogApi.analyze("do")

# get the analysis result

result = ecogApi.get_variable_value_float("result_avg_obj_size")

print(f"getting result: result_avg_obj_size={result}")

# close the project

ecogApi.close_project()

# shutdown API

ecogApi.shutdown()

if __name__ == "__main__":

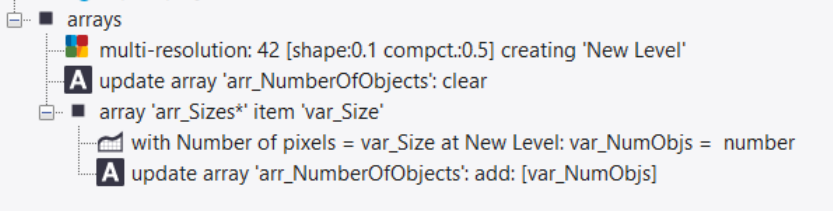

variable_example()Example - array_example.py

This example demonstrates how to pass array parameters to a rule set and how to retrieve a result array after the analysis.

The example shows the following main steps:

Load rule set arrays.dcp

Load the image data Landast.tif

Create a project

Initialize an input array arr_NumberOfObjects

Apply the rule set:

Multiresolution segmentation

Based on their size (feature number of pixels) image objects are assigned to the array 'arr_Sizes'

Objects with the same size are counted

Get the result of the analysis

Exemplary output:

size 20 pxls: #objects=849

size 21 pxls: #objects=811

size 22 pxls: #objects=792

size 23 pxls: #objects=744

size 24 pxls: #objects=724

Close the project
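The exemplary output above pairs each requested size with the count returned by the rule set. A minimal sketch of that formatting step, independent of the engine, is shown below. The helper format_counts is a hypothetical name; counts are cast to int because get_array_float returns float values.

```python
def format_counts(sizes, counts):
    """Pair object sizes with object counts and format one line per size."""
    if len(sizes) != len(counts):
        raise ValueError("sizes and counts must have the same length")
    # zip keeps both sequences aligned without manual indexing.
    return [f"size {size} pxls: #objects={int(count)}" for size, count in zip(sizes, counts)]


for line in format_counts([20, 21, 22], [849.0, 811.0, 792.0]):
    print(line)
```

The length check guards against a result array that does not match the input array, which would otherwise be silently truncated by zip.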

import ecognitionapi as ecog

import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"

os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def array_example():

'''

This example demonstrates how to pass array parameters to rule set

and how to retrieve result array after analysis is finished

'''

print("-----------------------------------------------")

print(f"Example: how to set input array parameter and get output array from the ruleset.")

# create eCognition API

ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

# load rule set

ecogApi.load_ruleset(os.path.abspath("rulesets/arrays.dcp"))

# add image

ecogApi.add_image(img_path = os.path.abspath("data/Landast.tif"))

# create a project

ecogApi.create_project()

# set input array

arr_sizes = [i for i in range(20,25)]

ecogApi.set_array("arr_Sizes", arr_sizes)

# run rule set

ecogApi.analyze("")

# get the analysis result

print("after multi-resolution segmentation here are number of objects per size:")

num_objs = ecogApi.get_array_float("arr_NumberOfObjects")

for i in range(len(num_objs)):

print(f"size {arr_sizes[i]} pxls: #objects={int(num_objs[i])}")

# close the project

ecogApi.close_project()

# shutdown API

ecogApi.shutdown()

if __name__ == "__main__":

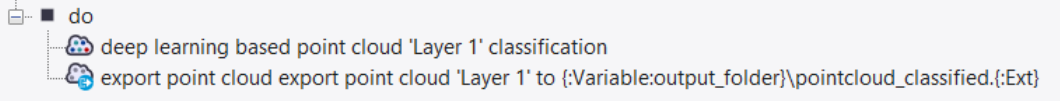

array_example()Example - pointcloud_example.py

This example demonstrates simple point cloud processing. The example shows the following main steps:

Load rule set pointcloud_classification.dcp

Load the point cloud data pointcloud.las

Create a project

Define an export directory

Apply the rule set

automatic deep learning based point cloud classification aerial model

export the results to the defined directory

Close the project

import ecognitionapi as ecog
import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"
os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def pointcloud_example():
    '''
    This example demonstrates point cloud processing. Windows OS only!
    '''
    print("-----------------------------------------------")
    print(f"Example: how to add and process point clouds. Windows OS only!")

    # create eCognition API
    ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

    # load rule set
    ecogApi.load_ruleset(os.path.abspath("rulesets/pointcloud_classification.dcp"))

    # add point cloud
    ecogApi.add_point_cloud(pc_path="data/pointcloud.las")

    # create project
    ecogApi.create_project()

    # set export directory
    output_dir = os.path.abspath("results")
    ecogApi.set_variable_value("output_folder", output_dir)

    # run rule set
    ecogApi.analyze("")

    # close the project
    ecogApi.close_project()

    # shutdown API
    ecogApi.shutdown()

if __name__ == "__main__":
    pointcloud_example()

Example - vector_example.py

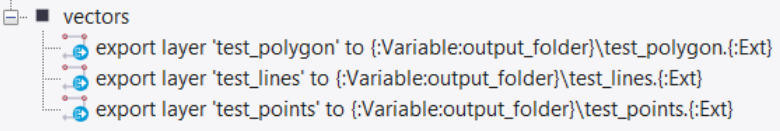

This example demonstrates how to dynamically create and add vectors to a project. The example consists of the following main steps:

Load rule set vectors.dcp

Create an empty project

Define different 2D vectors

Set an export directory

Apply the rule set to export the created vector items

Close the project
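The vector example builds its polygon from a rectangle whose outer ring repeats the first point at the end, while the hole is passed without a repeated point. A small sketch for generating such point lists follows. The helper rectangle_ring is hypothetical, and whether add_vector requires a closed ring is not stated in this chapter; the sketch only mirrors the point lists used in the example.

```python
def rectangle_ring(x_min, y_min, x_max, y_max, closed=True):
    """Return the corner points of an axis-aligned rectangle as (x, y) tuples."""
    ring = [(x_min, y_min), (x_min, y_max), (x_max, y_max), (x_max, y_min)]
    if closed:
        # Repeat the first point, as the outer ring in the example does.
        ring.append(ring[0])
    return ring


outer = rectangle_ring(100, 100, 900, 900)
hole = rectangle_ring(400, 400, 600, 600, closed=False)
print(outer)
print(hole)
```

The two lists could then be passed as points=outer and holes=[hole] to add_vector on a Polygon2D layer.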

import ecognitionapi as ecog
import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"
os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def vectors_example():
    '''
    This example demonstrates how to dynamically create and add vectors to the project
    '''
    print("-----------------------------------------------")
    print(f"Example: how to create vectors in eCognition project dynamically.")

    # create eCognition API
    ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

    # load rule set
    ecogApi.load_ruleset(os.path.abspath("rulesets/vectors.dcp"))

    # create empty project without geocoding
    ecogApi.create_project_set_size(0,0,1,1000,1000)

    # create simple 2D polygon with a hole
    vector_layer = ecogApi.create_vector_layer(layer_name="test_polygon", vector_type=ecog.VectorType.Polygon2D)
    vector_layer.add_attribute_definition("test_attribute", ecog.AttributeType.String)
    vector_layer.add_vector(points=[(100,100),(100,900),(900,900),(900,100),(100,100)],
                            attr_val=["some_attr_value"],
                            holes=[[(400,400),(400,600),(600,600),(600,400)]])

    # create sample 2D point layer with 4 points
    vector_layer = ecogApi.create_vector_layer(layer_name="test_points", vector_type=ecog.VectorType.Point2D)
    vector_layer.add_vector(points=[(100,100),(100,900),(900,900),(900,100)], attr_val=[])

    # create sample 2D line layer with 1 line
    vector_layer = ecogApi.create_vector_layer(layer_name="test_lines", vector_type=ecog.VectorType.Line2D)
    vector_layer.add_vector(points=[(100,100),(900,900)], attr_val=[])

    # set export directory
    output_dir = os.path.abspath("results")
    ecogApi.set_variable_value("output_folder", output_dir)

    # run rule set
    ecogApi.analyze("")

    # close the project
    ecogApi.close_project()

    # shutdown API
    ecogApi.shutdown()

if __name__ == "__main__":
    vectors_example()

Example - maps_example.py

This example demonstrates how to create a second map in a project and how to add data to that map. The worker map can be created explicitly, as in the code below, or it is created implicitly by an add_image call with the map_name parameter.

The example consists of the following main steps:

Create an empty project

Create an empty map called map2 with a defined size

Add an image to map2 - the map size remains as specified

Add a vector to map2

Save and close the project

import ecognitionapi as ecog
import os

# control logging level: "Nothing" / "Basic" / "Detailed" / "Everything"
os.environ["ECOG_CONFIG_logging"]="trace level=Basic"

def maps_example():
    '''
    This example demonstrates creation of second map in the project and
    adding data to that map. The worker map can be created explicitly as in the code below
    or it will be created implicitly by 'add_image' call with map_name parameter
    '''
    print("-----------------------------------------------")
    print(f"Example: how to create maps in eCognition project dynamically.")

    # create eCognition API
    ecogApi = ecog.EcognitionApi(log_file_path=os.path.abspath("logs/engine.log"), license_server="@localhost")

    # create empty project without geocoding (unused)
    ecogApi.create_project_set_size(0,0,1,100,100)

    # map2: create empty map 'map2' with the predefined size
    ecogApi.add_map_set_size("map2",0,0,1,1544,1506)

    # map2: add image, but map size remains as specified above
    ecogApi.add_image(img_path = os.path.abspath("data/Landast.tif"), layer_name="Layer 1", map_name="map2")

    # map2: add vector, 2D line layer
    vector_layer = ecogApi.create_vector_layer(layer_name="test_lines", vector_type=ecog.VectorType.Line2D, map_name="map2")
    vector_layer.add_vector(points=[(0,0),(1544,1506)], attr_val=[])

    # save project
    ecogApi.save_project(os.path.abspath("results/maps_example.dpr"))

    # close the project
    ecogApi.close_project()

    # shutdown API
    ecogApi.shutdown()

if __name__ == "__main__":
    maps_example()

For more information see also:

Embedded Python eCognition API:

Installation Guide > Windows > Python Installation - installation and setup

Reference Book > Algorithms and Processes > Miscellaneous > Python Script - description of the algorithm and its parameters

Reference Book > Algorithms and Processes > Miscellaneous > Embedded Python API Reference - reference for each class, properties and methods

User Guide > Python Integration - application examples for python scripts and Debugging python code in eCognition Developer